How to Search Elasticsearch (ES) Index from ExpressJS

“Search is something that any application should have”

Shay Banon

Overview

Searching is part of our daily lives, especially on the internet, where search engines are everywhere. Search functionality is therefore a fundamental need for almost any application, and today Elasticsearch is one of the most popular search engines in the application world.

As you may know, Elasticsearch comes with its own REST API. However, its responses are sometimes too cumbersome for another party to parse. Another concern is that a new version of Elasticsearch may change the response payloads. Rather than changing every API caller, it is better to implement the adapter pattern on top of it. To meet those needs, I am using ExpressJS as a REST API adapter between Elasticsearch and the world outside ES.
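To make the adapter idea concrete, here is a minimal sketch of a response mapper. The function name `toStableResult` and the output shape are illustrative assumptions, not part of Elasticsearch or of this project. It relies on one known difference between versions: Elasticsearch 6 reports `hits.total` as a number, while Elasticsearch 7 reports it as an object with `value` and `relation`.

```javascript
// Hypothetical adapter: maps a raw Elasticsearch search body to a stable shape.
// The function name and output fields are illustrative, not part of any library.
function toStableResult(esBody) {
  const hits = (esBody && esBody.hits) || {};
  const rawTotal = hits.total;

  // Elasticsearch 6 reports hits.total as a number,
  // Elasticsearch 7 reports it as { value, relation }.
  const total = typeof rawTotal === 'number'
    ? { value: rawTotal, relation: 'eq' }
    : {
        value: (rawTotal && rawTotal.value) || 0,
        relation: (rawTotal && rawTotal.relation) || 'eq'
      };

  // Expose only the stored documents, not the ES metadata around them.
  const content = (hits.hits || []).map((hit) => hit._source);

  return { total, content };
}

// Two sample bodies (made-up data) in the ES7 and ES6 layouts.
const es7Body = {
  hits: { total: { value: 1, relation: 'eq' }, hits: [{ _id: '1', _source: { title: 'Sapiens' } }] }
};
const es6Body = {
  hits: { total: 1, hits: [{ _id: '1', _source: { title: 'Sapiens' } }] }
};

// Both layouts map to the same output, so callers never see the difference.
console.log(JSON.stringify(toStableResult(es7Body)) === JSON.stringify(toStableResult(es6Body)));
// prints true
```

With a mapper like this in the adapter, a payload change in a future ES version only requires touching one function instead of every API caller.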

Why am I using NodeJS? Because the traffic between ES and the NodeJS application is mostly pure I/O, and parsing the payload requires no heavy computation. You may face different cases, though, where NodeJS is simply not enough.

Use Case

I want to search a collection of books by three criteria, which are by title, by category and by author.

Here is a list of books in my catalogue:

| Book | Category | Author First Name | Author Middle Name | Author Last Name |
| --- | --- | --- | --- | --- |
| Cracking the Coding Interview | Computer Programming | Gayle | Laakmann | McDowell |
| Sapiens: A Brief History of Humankind | Civilization | Yuval | Noah | Harari |
| Homo Deus: A Brief History of Tomorrow | Civilization | Yuval | Noah | Harari |

And our Book Search API will be:

```
// search by title
http://localhost:3000/books/title?keyword=yourkeyword

// search by category
http://localhost:3000/books/category?keyword=yourkeyword

// search by author
http://localhost:3000/books/author?keyword=yourkeyword
```

ExpressJS

First, we will generate an ExpressJS project named es-sample.

```bash
express --no-view es-sample && cd es-sample
```

Elasticsearch and Additional Libraries

We use the official Elasticsearch 7 client library, plus other libraries for compression, security headers and environment configuration.

```bash
# ES7 library
npm install @elastic/elasticsearch --save

# Security Header and Compression
npm install helmet compression --save

# Environment Configurations
npm install dotenv --save

# Utility Libs
npm install lodash --save
```

Elasticsearch.js

It is time to configure app.js to set up the client connection to Elasticsearch.

```javascript
const fs = require('fs');
const { Client } = require('@elastic/elasticsearch');

// ES client configuration
const client = new Client({
  node: process.env.ES_HOST,
  auth: {
    apiKey: process.env.API_KEY
  },
  ssl: {
    ca: [fs.readFileSync(process.env.CA_CERT)], // to read CA certificate
    rejectUnauthorized: true
  }
});

// Share the client with the route handlers through the Express app
app.set('client', client);
```

You don't need to configure the SSL part unless you use a self-signed certificate. If you do, the ES connection reads the certificate in .cer format. In general, the CA file should contain the full chain: the CA, the intermediate CA and the root CA.

```bash
# CA -> Intermediate CA -> ROOT CA
# or in short
cat ca.cer intermediate.cer root.cer > dev.cer
```

Another important note concerns the apiKey field. The API key should be the Base64 encoding of the ID and the api_key returned by the host, joined by a colon.
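As a minimal sketch of that encoding, the helper below joins the two values with a colon and Base64-encodes the result using Node's built-in `Buffer`. The function name `encodeApiKey` and the sample values are placeholders I made up for illustration, not real credentials.

```javascript
// Builds the Base64 form of an Elasticsearch API key from its two parts.
// `id` and `apiKey` stand for the `id` and `api_key` values returned when the key is created.
function encodeApiKey(id, apiKey) {
  return Buffer.from(`${id}:${apiKey}`, 'utf8').toString('base64');
}

// Placeholder values, not real credentials.
const encoded = encodeApiKey('my-key-id', 'my-api-key-secret');
console.log(encoded);
```

The resulting string is what would go into the API_KEY environment variable read by the client configuration.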

To learn how to create an API key in Elasticsearch, see the Elasticsearch documentation for the create API key API.

Routes

We have three routes for our book search API. They are routes/title.js, routes/category.js and routes/author.js.

routes/title.js

```javascript
const _ = require('lodash')

// parseBody, parseTotalHits, writeResponse and errorHandler are project
// helpers that are not shown in this excerpt.
exports.searchTitle = async function(req, res, next) {
  try {
    const payload = {
      index: process.env.ES_CONTRACT_INDEX,
      body: {
        "query": {
          "bool": {
            "must": [{
              "query_string": {
                "default_field": "title",
                "query": req.query.keyword || '*'
              }
            }]
          }
        }
      }
    }

    // Pagination, with defaults when the caller does not pass from/size
    const from = req.query.from || 0
    const size = req.query.size || 10
    _.assign(payload, { from: from, size: size })

    // Let's search!
    const client = req.app.get('client')
    const { body } = await client.search(payload)

    // Return only the document sources, without the 'key' field
    const results = {
      content: parseBody(body)
        .map(function (i) {
          return _.omit(i._source, 'key')
        })
    }

    const totalResults = {
      total: {
        value: parseTotalHits(body).value,
        relation: parseTotalHits(body).relation
      }
    }

    writeResponse(res, _.assign(totalResults, results))
    next()
  } catch (err) {
    errorHandler(req.originalUrl, err.message, next, 500)
  }
};
```

routes/category.js

```javascript
const _ = require('lodash')

// parseBody, parseTotalHits, writeResponse and errorHandler are project
// helpers that are not shown in this excerpt.
exports.searchCategory = async function(req, res, next) {
  try {
    const payload = {
      index: process.env.ES_CONTRACT_INDEX,
      body: {
        "query": {
          "bool": {
            "must": [{
              "query_string": {
                "default_field": "category",
                "query": req.query.keyword || '*'
              }
            }]
          }
        }
      }
    }

    // Pagination, with defaults when the caller does not pass from/size
    const from = req.query.from || 0
    const size = req.query.size || 10
    _.assign(payload, { from: from, size: size })

    // Let's search!
    const client = req.app.get('client')
    const { body } = await client.search(payload)

    // Return only the document sources, without the 'key' field
    const results = {
      content: parseBody(body)
        .map(function (i) {
          return _.omit(i._source, 'key')
        })
    }

    const totalResults = {
      total: {
        value: parseTotalHits(body).value,
        relation: parseTotalHits(body).relation
      }
    }

    writeResponse(res, _.assign(totalResults, results))
    next()
  } catch (err) {
    errorHandler(req.originalUrl, err.message, next, 500)
  }
};
```

routes/author.js

```javascript
const _ = require('lodash')

// parseBody, parseTotalHits, writeResponse and errorHandler are project
// helpers that are not shown in this excerpt.
exports.searchAuthor = async function(req, res, next) {
  try {
    const payload = {
      index: process.env.ES_CONTRACT_INDEX,
      body: {
        "query": {
          "bool": {
            "must": [{
              "query_string": {
                "fields": ["author.first_name","author.middle_name","author.last_name"],
                "query": req.query.keyword || '*'
              }
            }]
          }
        }
      }
    }

    // Pagination, with defaults when the caller does not pass from/size
    const from = req.query.from || 0
    const size = req.query.size || 10
    _.assign(payload, { from: from, size: size })

    // Let's search!
    const client = req.app.get('client')
    const { body } = await client.search(payload)

    // Return only the document sources, without the 'key' field
    const results = {
      content: parseBody(body)
        .map(function (i) {
          return _.omit(i._source, 'key')
        })
    }

    const totalResults = {
      total: {
        value: parseTotalHits(body).value,
        relation: parseTotalHits(body).relation
      }
    }

    writeResponse(res, _.assign(totalResults, results))
    next()
  } catch (err) {
    errorHandler(req.originalUrl, err.message, next, 500)
  }
};
```
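The three routes differ only in the fields they search, so the payload construction could be shared. The following is a minimal sketch of one way to do that, not the approach used in the project: `buildSearchPayload` is a hypothetical helper name, and it uses the `fields` option of `query_string` for all three cases instead of `default_field`. It also converts `from` and `size` to integers, since Express delivers query-string values as strings.

```javascript
// Hypothetical helper: builds the search payload shared by the three routes.
// `fields` lists the document fields to search; `query` plays the role of req.query.
function buildSearchPayload(index, fields, query) {
  // Query-string values arrive as strings, so convert and fall back to defaults.
  const from = Number.parseInt(query.from, 10) || 0;
  const size = Number.parseInt(query.size, 10) || 10;

  return {
    index,
    from,
    size,
    body: {
      query: {
        bool: {
          must: [{
            query_string: {
              fields,
              query: query.keyword || '*'
            }
          }]
        }
      }
    }
  };
}

// Example with a made-up index name and query object.
const payload = buildSearchPayload('books', ['title'], { keyword: 'sapiens', size: '5' });
console.log(JSON.stringify(payload, null, 2));
```

Each route would then call the helper with its own field list, for example `['category']` or the three `author.*` fields, and pass the result to `client.search`.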

Run

Before we run our Node application, we need to run ES on Docker and import our test data.

```bash
# Run ES
docker run -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:7.6.1

# Import test data
curl -H 'Content-Type: application/x-ndjson' -XPOST 'localhost:9200/books/_bulk?pretty' --data-binary @sample-data/sample.ndjson
```

Next, execute es-sample.

```bash
NODE_ENV=dev npm start
```

Then begin searching.

```bash
# I'm using httpie
http GET http://localhost:3000/books/title?keyword=sapiens
http GET http://localhost:3000/books/category keyword==computer
http GET http://localhost:3000/books/author keyword==noah
```

Advanced Topic

This advanced topic covers putting the es-sample project into a Jenkins pipeline. As usual, the foundation of good CI/CD practice is test automation with good code coverage. Therefore, we first need to add testing libraries.

Testing Libraries

For testing, I am using mocha, chai, chai-http and istanbuljs.

```bash
npm install chai chai-http nyc mocha --save-dev
```

Then add these scripts to package.json.

```json
"scripts": {
  "start": "node ./bin/www",
  "test": "mocha 'test/**/*.js' --timeout 10000",
  "coverage": "nyc npm test"
}
```

Run your tests manually.

```bash
npm test
npm run coverage
```

Jenkins Pipeline

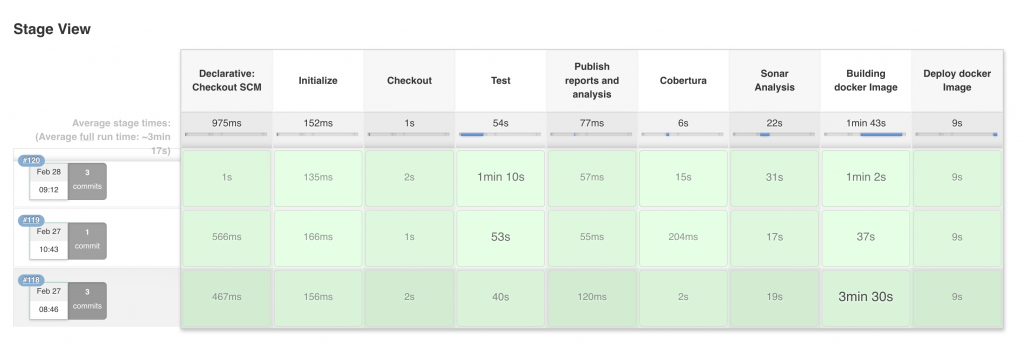

Once your tests are set up, we will create a deployment pipeline using Jenkins. Our pipeline follows the Trunk Based Development (TBD) approach, so our version label combines the build date and the git SHA. We will run the integration tests using a sidecar container. Then we will publish the reports, check in SonarQube whether our code passes the quality gate, and lastly ship our application as a Docker image.

```groovy
stages {
  stage("Initialize") {
    steps {
      script {
        echo "${BUILD_NUMBER} - ${env.BUILD_ID} on ${env.JENKINS_URL}"
        echo "JOB_NAME :: ${JOB_NAME}"
        echo "GIT_URL :: ${GIT_CODE_URL}"
        echo "GIT_BRANCH :: ${GIT_CODE_BRANCH}"
      }
    }
  }
  stage('Checkout') {
    steps {
      git branch: "${GIT_CODE_BRANCH}", url: "${GIT_CODE_URL}"
      script {
        VERSION = getDate() + '.' + getShortCommitHash()
        echo "VERSION :: ${VERSION}"
      }
    }
  }
  stage('Test') {
    steps {
      script{
        docker.image('docker.elastic.co/elasticsearch/elasticsearch:7.6.1').withRun('-e discovery.type=single-node') { c ->
          docker.image('node:12').inside( "--link ${c.id}:elasticsearch -u 1001:1000 -e HOME=.") {
            sh 'until $(curl --output /dev/null --silent --head --fail http://elasticsearch:9200); do printf "."; sleep 1; done'
            sh 'npm install'
            sh 'npm run coverage'
          }
        }
      }
    }
  }
  stage('Publish reports and analysis') {
    parallel {
      stage('Cobertura') {
        steps {
          cobertura autoUpdateHealth: false,
            autoUpdateStability: false,
            coberturaReportFile: '**/coverage/cobertura-coverage.xml',
            conditionalCoverageTargets: '70, 0, 0',
            failUnhealthy: false,
            failUnstable: false,
            lineCoverageTargets: '80, 0, 0',
            maxNumberOfBuilds: 0,
            methodCoverageTargets: '80, 0, 0',
            onlyStable: false,
            sourceEncoding: 'ASCII',
            zoomCoverageChart: false
        }
      }
      stage('Sonar Analysis') {
        steps {
          script {
            docker.image('myregistry/sonar-scanner-cli:4.2.0').inside("-e SONAR_LOGIN=${SONAR_LOGIN}") {
              sh 'sonar-scanner -Dsonar.projectKey=${SONAR_PROJECT_KEY}'
              SONAR_RESULT = sh(
                script:'''
                  curl -XGET -u ${SONAR_LOGIN}: https://your_sonar_url/api/qualitygates/project_status?projectKey=${SONAR_PROJECT_KEY} | jq -r .projectStatus.status
                ''',
                returnStdout: true
              )
              echo "sonar analysis result: ${SONAR_RESULT}"
              if(!SONAR_RESULT.trim().equalsIgnoreCase('OK')) {
                currentBuild.result = "FAILURE"
                throw new Exception("Does not pass quality gateway")
              }
            }
          }
        }
      }
    }
  }
  stage('Building docker Image') {
    steps {
      script {
        myImage = docker.build("${registry}/${registryPath}/${IMAGE_NAME}:${VERSION}")
      }
    }
  }
  stage('Deploy docker Image') {
    steps{
      script {
        docker.withRegistry('https://' + registry, registryCredential ) {
          myImage.push()
          myImage.push('latest')
        }
      }
    }
  }
}
```
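The Checkout stage builds the version label from `getDate()` and `getShortCommitHash()`, two pipeline helpers that are not shown here. To illustrate the resulting label, here is a rough JavaScript sketch of the same idea. The `yyyyMMdd` date format, the 7-character hash length and the name `buildVersion` are my assumptions; the actual helpers may format things differently.

```javascript
// Hypothetical equivalent of getDate() + '.' + getShortCommitHash().
// The yyyyMMdd date format and the 7-character hash length are assumptions.
function buildVersion(date, commitSha) {
  const yyyy = date.getUTCFullYear();
  const mm = String(date.getUTCMonth() + 1).padStart(2, '0');
  const dd = String(date.getUTCDate()).padStart(2, '0');
  return `${yyyy}${mm}${dd}.${commitSha.slice(0, 7)}`;
}

// Made-up build date and commit hash for illustration.
const version = buildVersion(
  new Date(Date.UTC(2020, 2, 15)),
  '9fceb02d0ae598e95dc970b74767f19372d61af8'
);
console.log(version);
// prints "20200315.9fceb02"
```

Because every commit on the trunk yields a distinct label, the Docker image tag can be traced back to the exact commit it was built from.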

Import your Jenkinsfile and start your build automation. You will see something like this.

Closure

That's all from my side. The full source code is available in my GitHub repository.